What do you do when you don’t know what to do? Even worse: what do you do when people are paying you to do things, and expect you to do things, but you don’t know what to do? Are you in a brand new domain? A brand new type of business or a completely new role? What about if you’re in a completely new industry?

When you have just started a new role, the pressure to deliver, and to deliver quickly, can be quite daunting. Using hypothesis-driven processes in your daily life can ease the tension.

A few caveats before getting started:

- This approach works best if your manager supports you. You can do it by stealth, or without asking (sometimes it works best if you don’t ask), but often you’ll get the best results if you bring the method to your team - that’s any managers you may have, as well as anyone who reports to you, or colleagues with whom you work.

- The stories I tell are from a software context. I believe that these processes can be used in different settings, but be thoughtful about how you do that, and don’t forget to test and adjust as you need.

Definition: Hypothesis

- A supposition or proposed explanation made based on limited evidence as a starting point for further investigation.

- A theory before it’s tested. A hypothesis, like a theory, should have both explanatory and predictive value.

In your everyday work, you have some impacts you want to achieve, be they strategic (increasing profit or reducing cost) or tactical (increasing daily active users by some proportion). Traditionally you might approach those goals any number of ways. You think up a solution and execute on that. You call time on the mission and move on to the next thing. And the next thing. And the thing after that. Project managers often get a bad rap for this, but in many cases it’s symptomatic of the business rather than the project manager. Those managers aren’t incentivised to return to things and assess the benefit since, in many cases, the contract has already paid out and doing so would not earn any more value for the business.

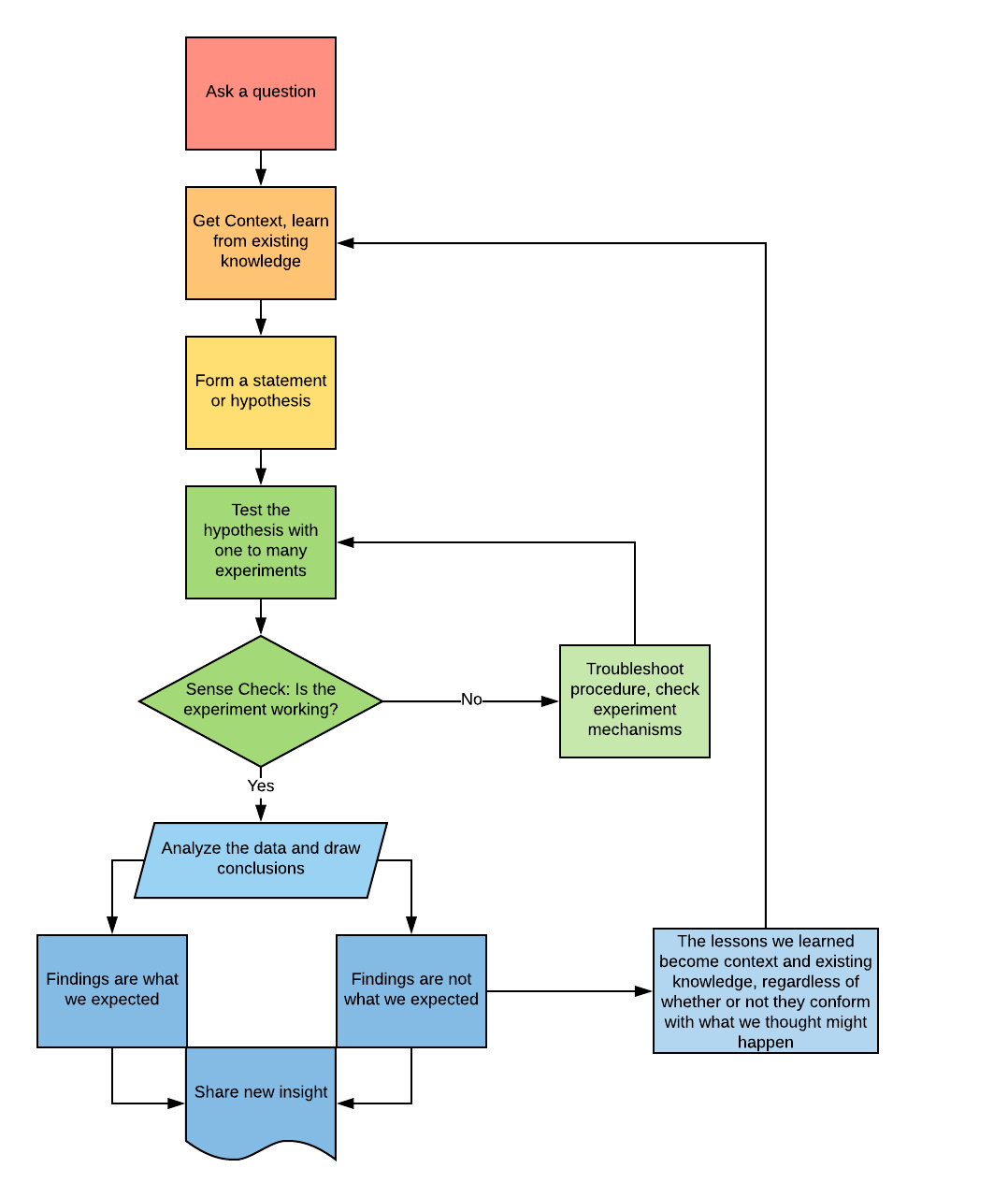

Enter stage left, the scientific method

For hundreds of years, people who have no idea what to do have figured out what they need to do by using the scientific method. It looks something like this:

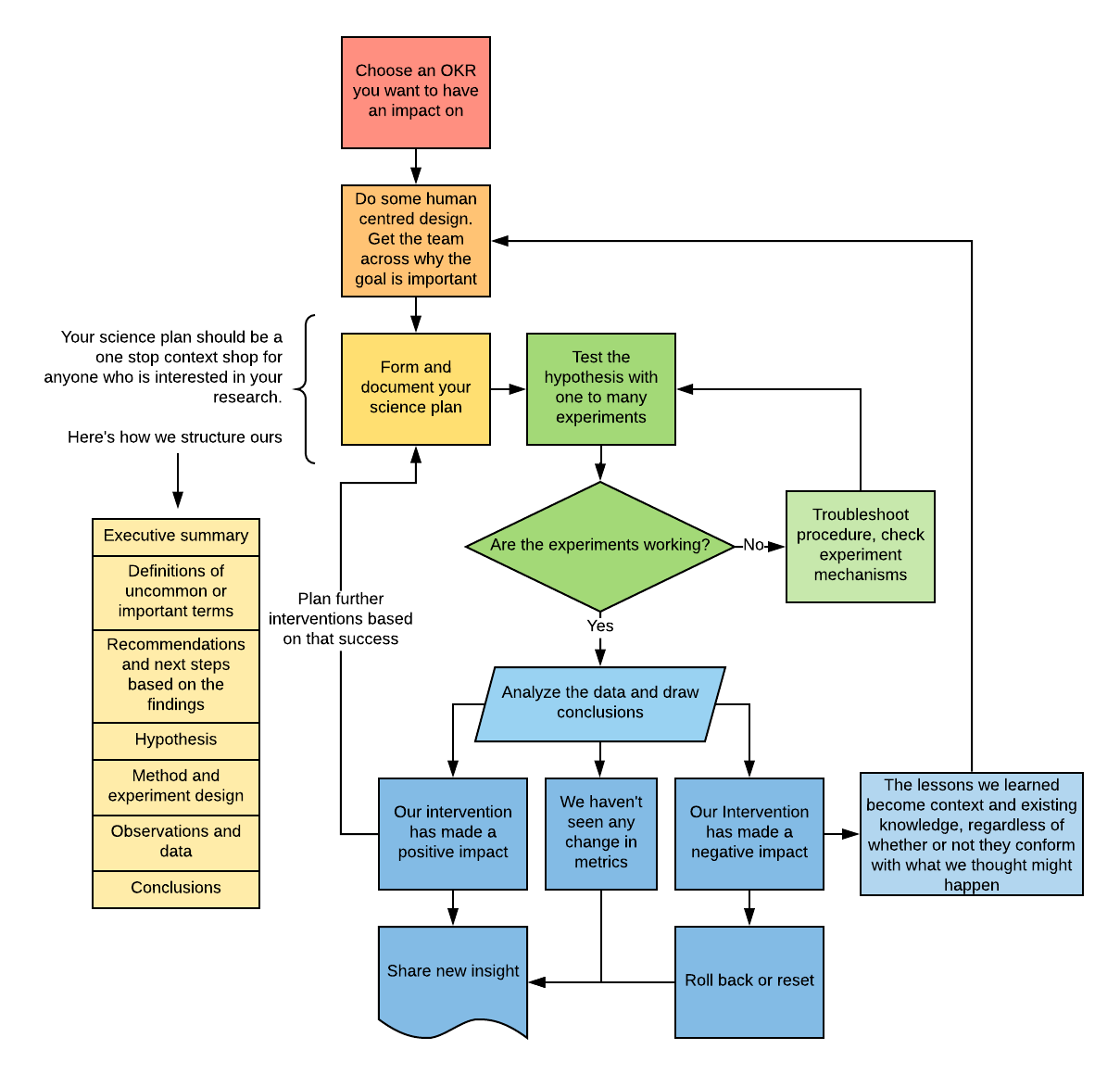

I’ve made some slight tweaks to the technique. The team here use this flow to manage the delivery of one to many features that will result in actionable insight. We’ve found that building a process where we regularly look at the outcomes we’ve achieved, in the context of our goals and the work remaining, helps us keep on track. Being mindful of business objectives reduces context switching - when the business makes requests, we can point them to data, if we have it, on why the request may or may not affect the goal. We can also use the findings to help us prioritise. Those findings show financial value to the business and value to the customer. If someone asks us for some work, we’ll be able to look at the cost of that work, the opportunity cost and the likely benefit we might derive, and give data back on how that will affect the backlog and the results we might see from the team.
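To make that comparison of cost, opportunity cost and likely benefit concrete, here is a minimal Python sketch. The `WorkRequest` type, its fields and every figure in it are hypothetical; your team would substitute its own estimates and units, and may well weigh them differently.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class WorkRequest:
    """A piece of requested work with rough estimates (all figures hypothetical)."""
    name: str
    cost: float              # estimated cost of doing the work
    opportunity_cost: float  # estimated value of the backlog work it displaces
    likely_benefit: float    # estimated benefit if the work succeeds


def net_value(request: WorkRequest) -> float:
    """Likely benefit minus the cost and the opportunity cost."""
    return request.likely_benefit - request.cost - request.opportunity_cost


def rank_requests(requests: List[WorkRequest]) -> List[WorkRequest]:
    """Order requests from highest to lowest estimated net value."""
    return sorted(requests, key=net_value, reverse=True)


requests = [
    WorkRequest("new export button", cost=5.0, opportunity_cost=8.0, likely_benefit=10.0),
    WorkRequest("faster checkout", cost=8.0, opportunity_cost=3.0, likely_benefit=20.0),
]
for request in rank_requests(requests):
    print(f"{request.name}: {net_value(request):+.1f}")
```

A simple subtraction like this is only as good as the estimates behind it, which is why findings from earlier experiments are worth feeding back into the numbers.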

The Method

Start with an impact that you want to have on someone - it could be a staff member, a customer or some other kind of stakeholder. Once you’ve defined the impact you want to have and formed that into a goal or objective and key result, you can start building context.

Doing background research and getting that context can often be the most costly step in terms of time spent. If there is a mountain of existing data to trawl through, it can be quite a tedious process. Persevere! It’s worth it. The more insight into the issue you can get before you form the plan, the better. When I’m going through this process, I’ll skim the available documentation first and ask myself “Will what I’m learning right now make me adjust the plan, or does it answer my question or address my goal some other way?” If the answer is yes to either of those things, it’s worth taking the extra time to make those adjustments. In some cases, you can skip the experiment altogether and prove out your goal by combining multiple existing sources of data in a new way. The time and cost of gaining that context can save you the time and cost of the rest of the process. You can skip straight to the analysis and dissemination of that knowledge.

Forming your plan is where most of the risk in this process sits. If you are missing crucial data, it can skew the method such that the experiments become questionable. If you’re investigating how to reduce the number of times a boy cries wolf, you should try to understand that humans lie a bunch. You might also miss that sometimes wolves do appear, despite the local shepherd boy having no credibility. Standing in a field cataloguing the presence or absence of Canis lupus won’t get you closer to an impact or an answer.

Further, if your plan is missing crucial information on what data needs to be tracked to prove out the experiment, you’ll struggle to show that you have had the desired impact.

In an ideal world, you should have a strong hypothesis that can be tested independently of other work going on. That hypothesis should relate to the goal, and the experiments you design should provide you with the data you need to validate or refute the hypothesis. Sometimes it’s hard to get the information you need, or it’s hard to run the experiment in isolation from other changes. That’s okay. From the research you’ve done getting to those points, you should have a good feel for how to mitigate those concerns or make a call on whether or not the experiments should proceed.
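One way to catch those gaps before an experiment starts is to write the plan down as a structured record and check it. The sketch below is an illustration only: the `ExperimentPlan` type, its field names and the `plan_gaps` helper are hypothetical, and the checks cover just the concerns named above (a goal, a hypothesis, the data to track, and other changes running alongside).

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ExperimentPlan:
    """A hypothetical record of one experiment plan."""
    goal: str                         # the impact you want to have
    hypothesis: str                   # best guess, stated so it can be refuted
    metric: str                       # what you will track to judge the result
    baseline: Optional[float] = None  # metric value before the intervention
    target: Optional[float] = None    # metric value that would support the hypothesis
    confounders: List[str] = field(default_factory=list)  # other changes in flight


def plan_gaps(plan: ExperimentPlan) -> List[str]:
    """List what is missing or risky in the plan; an empty list means no known gaps."""
    gaps = []
    if not plan.goal.strip():
        gaps.append("no goal stated")
    if not plan.hypothesis.strip():
        gaps.append("no hypothesis stated")
    if not plan.metric.strip():
        gaps.append("no metric to track")
    if plan.baseline is None:
        gaps.append("no baseline measurement for the metric")
    if plan.target is None:
        gaps.append("no target value for the metric")
    if plan.confounders:
        gaps.append("cannot run in isolation from: " + ", ".join(plan.confounders))
    return gaps


plan = ExperimentPlan(
    goal="Increase daily active users",
    hypothesis="A shorter sign-up form will increase daily active users",
    metric="daily active users",
    target=1100.0,
    confounders=["pricing page redesign"],
)
for gap in plan_gaps(plan):
    print("Gap:", gap)
```

A non-empty list doesn’t have to stop the experiment; it gives you something specific to mitigate or to weigh when deciding whether to proceed.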

After you have designed your experiments, the next step is to run them as per the plan. It’s essential that during this time you rigidly stick to the plan (unless it has become unsafe, unsound or otherwise untenable). As you run those experiments, it helps to note down any points for improvement for next time. If the tests are not running as you expect, those notes will help you troubleshoot, and they will help the way you design and reason about experiments evolve as your understanding grows.

Your experiments have run their course; now it’s time to analyse the data. How you might do that is a little outside the scope of this article. There are several methods for synthesising that information in both qualitative and quantitative ways. Once that process is complete, the write-up can begin! It’s essential that during the write-up you clearly and plainly state one of three things:

- Has our intervention made a positive impact (i.e. are we closer to our goal now?)

- Has our intervention made a negative impact (i.e. are we further from our goal now?)

- Has our intervention made an impact that we can see? (i.e. have we been tracking the right metric in regards to the impact?)

If you can clearly state one of the above and back that up with the raw data that proves out that point, the rest of the write-up falls into place. You can spend more time talking about what happens next, and more time socialising those results. Either way, it’s essential that the research you have created is filed away in a place it can be easily referred to, as it now forms part of the ecosystem of knowledge you have to draw on for the next goal, hypothesis and experiment.
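The three possible statements from the write-up can be sketched as a small classification. This is a rough illustration, not a substitute for proper analysis: the `Outcome` names, the `classify_outcome` function and the `noise_margin` parameter are all hypothetical, and the margin simply stands in for whatever your analysis says counts as a change you can actually see.

```python
from enum import Enum


class Outcome(Enum):
    POSITIVE = "positive impact: we are closer to our goal"
    NEGATIVE = "negative impact: we are further from our goal"
    NO_VISIBLE_IMPACT = "no visible impact: check we tracked the right metric"


def classify_outcome(
    baseline: float,
    observed: float,
    noise_margin: float,
    higher_is_better: bool = True,
) -> Outcome:
    """Compare the metric before and after the intervention.

    Changes smaller than noise_margin are treated as not visible.
    """
    change = observed - baseline
    if not higher_is_better:
        change = -change  # e.g. for costs, a drop is an improvement
    if change > noise_margin:
        return Outcome.POSITIVE
    if change < -noise_margin:
        return Outcome.NEGATIVE
    return Outcome.NO_VISIBLE_IMPACT


# Hypothetical figures: daily active users before and after a change.
result = classify_outcome(baseline=1000, observed=1100, noise_margin=50)
print(result.value)
```

Whichever of the three comes back, it goes in the write-up alongside the raw data behind it.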

The Difference

The difference between these two processes is less about the process and more about the context. It’s relatively safe to assume that if you’re doing science in a scientific domain (at a university, technical institution or somewhere else) you often have a large body of work to draw on that is formally named, has been cited a bunch and published.

Sometimes in a private company, you don’t have that body of knowledge to draw on. Maybe you have an internal wiki, or perhaps documentation is spread across a bunch of shared drives. This process gives you a place to start. Starting with this process will help you generate that body of knowledge (which you should then store in a tool that can be accessed by whoever needs it). Being able to draw on that research takes the ego out of conversations about how to achieve goals. Having a team follow this process can also help you avoid distraction, as it’s clean, simple and ideally short if you’re working down in the detail. If you’re not working at that tactical level, you can also use this process to plan work out across the business - for each high-level initiative, you might repeat lower-level versions of this process many times with different foci.

It’s important to note that there is no failure case in this process. Should an experiment not yield the result you expect, that’s okay. There is no value judgement or loss of face there, since we acknowledged at the start that the hypothesis is only a best guess and that ultimately the data will tell us the answer. Regardless of the outcome, that knowledge gets stored and becomes part of the institutional experience that can now be built up, which helps others in your organisation use your lessons in their work.

In this way, we can start creating an ecosystem of research that we can use to reinforce our learning and experiments. It means that we can build on and extend our body of knowledge with each analysis we run, in a way that relies on empirical data and processes that can’t be argued with or litigated (assuming the methodology behind your research is sound). If your organisation has a problem with pet projects that deliver questionable value (I’ve seen and heard about this problem in many organisations), this process is excellent for having a conversation about whether or not this is the best thing to be doing with the resources available to the business.
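As a rough illustration of storing every outcome where others can find it, the sketch below appends findings to a JSON Lines file and searches them by goal. The file name, the record fields and both helper functions are hypothetical; in practice this role is more likely played by a wiki or whatever shared tool your organisation already uses.

```python
import json
import tempfile
from pathlib import Path
from typing import Dict, List


def file_finding(store: Path, finding: Dict[str, str]) -> None:
    """Append one finding to the store, whatever its outcome."""
    with store.open("a", encoding="utf-8") as handle:
        handle.write(json.dumps(finding) + "\n")


def find_by_goal(store: Path, keyword: str) -> List[Dict[str, str]]:
    """Return stored findings whose goal mentions the keyword."""
    if not store.exists():
        return []
    with store.open(encoding="utf-8") as handle:
        findings = [json.loads(line) for line in handle if line.strip()]
    return [f for f in findings if keyword.lower() in f["goal"].lower()]


# A temporary directory keeps the example self-contained.
with tempfile.TemporaryDirectory() as folder:
    store = Path(folder) / "findings.jsonl"
    file_finding(store, {
        "goal": "Increase daily active users",
        "hypothesis": "A shorter sign-up form will increase daily active users",
        "outcome": "no visible impact",
    })
    for finding in find_by_goal(store, "daily active users"):
        print(finding["hypothesis"], "->", finding["outcome"])
```

Note that the result with no visible impact is stored just like any other: it is still something the next person can build on.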

Speed to Market

Executing work in this way can be as slow or as fast as your business needs it to be. For domains that require high certainty and minimal risk, we can make choices about how long we run experiments for, we can limit the number of users involved in the research, and the defined process can help you include other parts of the business. As part of the context building step of your experiment, you can reach out and involve other teams or people if you have that specialisation available, and if you don’t, that’s okay too because there isn’t a commitment to the experiment long term unless that suits the business.

Should you be in a domain that encourages rapid iteration, short experiments with a large number of users can help you sense and respond rapidly to the market. Borrowing heavily from lean methodologies, you can focus your effort on the successful experiments and quickly discard ideas that do not help you achieve your goals.

You still need to prioritise

Applying the scientific method in your role doesn’t replace the traditional process of prioritising work. It remains vital for everyone involved to understand what the most important thing to do is and why it’s important. Whether you use the Kano model, Outcome-Driven Innovation or something else entirely, those goals still need to be weighed against other goals. This method tells you whether or not you’re moving the dial, and whether you should keep trying to move the dial the way you currently are, or change or stop.

Conclusions and key points

The scientific method is an established, proven way of learning new things. This method is something that can be used in a business or technical context as much as in academia.

Using this process, or basing your process on the method, can help you understand new situations and contexts in a way that is safe, rapid and applicable to many domains or industries.

It helps if you’ve got buy-in from your organisation, but ultimately you can use this process to guide your work internally within your team, or on your own, if you don’t work as part of a team.

This process helps you define a goal, and plan interventions to shift a metric on that goal - it then encourages you to show others the results and use that new knowledge as part of your future work.

References and further reading

[1] Hypothesis-driven development - Dr Dobb’s World of Software Development

[2] Experiment driven development - Sebastien Auvray, InfoQ

[3] How to implement hypothesis-driven development - Barry O’Reilly, Thoughtworks