Better buttons, cleaner HTML markup, stricter adherence to WCAG - we’ve been investing in digital accessibility for over twenty years, and that investment has opened doors for millions of people worldwide.

We are nearing a plateau. I believe that the next order-of-magnitude gain in accessibility won’t come from ever-tighter contrast ratios or perfection in semantic HTML.

We’ve reached the point of diminishing returns. We might build current best practice levels of accessibility faster and more effectively with better technology. Still, we are bound in our current approach to accessibility by the interfaces we ship. The next leap in accessibility cannot come from better execution of traditional interfaces; it can only come from changing the interface. Changing the interfaces means making them adaptive. Making them personal and responsive in ways that statically designed software can never achieve.

That change will come from agents. Agents are the next step in digital accessibility.

By agents, I mean allies that help you and increasingly act on your behalf. These agents will translate, reshape and personalise every interface an individual might need. In some cases, that agent will be your personal agent; in others, it will be an agent provided for you to use by the company you’re interacting with. Either way, these agents will be accountable for ensuring that the things you need to interact with, screens, video, forms and more, will be exquisitely tailored for your unique needs.

At one end of the spectrum, that might mean synthesising the information you’re reading with relevant insights from elsewhere on the internet.

At the other end, it might mean actively interpreting for you, turning information that was previously inaccessible into something you can use instantly.

Imagine a world where anyone with macular degeneration can fill in their Lotus Notes timesheets!

Or where your personal agent spends a day learning your unique colour perception profile, then shares it with Netflix’s agent, which re-colours every show you watch to give you the most vivid picture possible.

If agents can reshape interfaces in real time, they don’t just make things easier; they change the rules of interface development. The most significant rule they will break is the tradeoff between usability and accessibility.

Dissolving the accessibility-usability dilemma

For years, we have wrestled with a false choice: make a product fully accessible and risk adding friction, or make it frictionless and knowingly exclude users. Agents dissolve that tradeoff. They will adapt to interfaces and needs in real time.

With the right tech and design, agents can deliver rigorous accessibility AND effortless usability simultaneously. The same agent that makes Lotus Notes usable for a user with macular degeneration can also streamline the workflow for a power user by skipping the UI and hitting APIs directly - without forcing either of those users to compromise.

To make agents truly helpful, we need to give them the same usage cues we give humans today, but in a form that agents can read and act on. In design, we call these cues ‘affordances’.

What are affordances?

Affordances are the characteristics or properties of an object that suggest how we can use it. They show a user that an object can be interacted with.

Strictly speaking, though, an affordance is not a fixed “property” of an object (whether a physical object or a user interface). Instead, an affordance is defined in the relation between the user and the object: a door affords opening if you can reach the handle. For a toddler who cannot reach the handle, the door does not afford opening.

An affordance is, in essence, an action possibility in the relation between user and an object.

When your software’s user is an agent, the affordances you build must be designed for machine interpretation and action, not just human user perception.

Let’s look at three areas where agent-friendly affordances unlock capabilities beyond human limits, starting with precision.

Beyond-Human precision

Today, surgeons use machines in surgery where human hands lack the precision needed to achieve the work safely. Tomorrow, a world awaits us where NOT using a machine will be considered malpractice.

Imagine that conversation between two surgeons: “What!? What do you mean you didn’t use the robot to suture that vein back together? You just aren’t that accurate! No human can be after three hours of surgery!”

Hopefully they aren’t as aggressive looking as this one.

What would the world need to look like for this to be possible?

We would need to have built the affordances necessary for an agent to interact with external tools and the patient. We would need safety and security guarantees, and we would need signs of confidence and doubt so that humans can review and intervene as needed.

In short, we would need equal affordances for the robot and for the human. We might need:

- Some kind of semantic data mapping of the human body that an agent could understand and apply knowledge to (Agent: “the patient’s blood pressure is stable, if it drops, I need to check for bleeding and respond with the appropriate tool immediately”)

- Tools that provide telemetry and other feedback via APIs (Agent: “Anaesthetic is being consumed at the expected rate given patient physiology, if rates change up or down, I will pause and escalate for human review”)

- A state machine for agents (Agent: “The state of the cardiopulmonary bypass machine is 12, subcomponents are in state 3, 7, 16 - I have mapped out a response plan if these states change.”)

- Insight into control states for humans (Human in the loop: I can see that the robot is in a planning state for the following surgical task and is anticipating three scenarios. I can see the plans for those scenarios and have pre-approved those actions only)

These examples show that the affordances we need for agents are the same as those we need for a novice surgeon. We need clear insight into the “headspace” of the novice; we need the novice to understand the capabilities of the tools at hand and how to use them to achieve their plan (i.e. both the theory and the applied knowledge); and we need someone with experience who can provide in-the-moment feedback that improves the novice’s performance. None of these affordances are new; we’re just applying to computers what we used to apply only to humans.

If precision is about moment-to-moment execution, diligence is about never missing a detail, no matter how long you look or how deeply you dig.

Beyond-Human diligence

My team is currently building payroll software. It may be the first agentic payroll software in Oceania, if not the world. We’re planning and actively investing in a world where we can ensure that you are paid correctly by inspecting every single pay packet you have ever received, every time we calculate your next pay packet. A world where we can review 10 years of your tax history, 10 years of superannuation contributions, and 10 years of deductions and bonuses. Every single number that has ever been associated with you and your pay, for 10 years or more.

Payroll is a highly complex, compliance-heavy domain. Mistakes trigger legal penalties that can run into the millions. In Australia, if a company pays an employee incorrectly, the individual payroll staff member involved in that transaction can be jailed!

The pressure to get it right is massive, and the longer your employee is with you, the more data you have to check and re-check every time you pay them. Many of my customers have been with us for over a decade!

Can you imagine a human reviewing that for ten, a hundred, a thousand, or ten thousand employees? Picture this: It’s the end of the financial year. If you are in agriculture, this is your equivalent of moving day. If you’re in retail, it’s Black Friday, and it’s flu season in healthcare.

You’re the CFO. Your payroll manager walks into the room and says, “We’ve reviewed 100% of the payroll transactions we’ve made for about five thousand staff employed in the last seven years against every change in tax and holiday law in that period. We found three issues and fixed them. You can sign off on the end-of-year accounts.”

Previously, the payroll manager would have sampled the data, with the risk of issues slipping through the cracks. In most cases today, your end-of-year accounts process is focused on the yearly period in question, and the number of reports and the volume of data needed are enormous. Most companies (at least in New Zealand) can’t afford to go as deep as they would like, which is why auditors routinely come through and do things like Holidays Act reviews and remediation.

The affordances we need for this level of automated compliance audit look very different to the affordances required for surgery.

We need:

- Immutable event logs (time-ordered records that afford the agent insight into the history of the employee they are checking)

- Semantic tagging of transactions (to convey intent and interactions around pay configuration)

- Rules versioning (to ensure the agent understands how to reconcile changes in the legislative environment against changes in configuration)

- Scalable Audit APIs (to simplify data access for the agent and provide interactions with other services)
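To make the list above a little more concrete, here is a minimal Python sketch of how an immutable event log, semantic tags and rules versioning might combine into a full-history check. Everything in it is an assumption for illustration: the `PayEvent` and `RuleVersion` shapes, the overtime multipliers and the dates are hypothetical and do not describe any real payroll system or legislation.

```python
from dataclasses import dataclass
from datetime import date
from decimal import Decimal


@dataclass(frozen=True)  # frozen: an event cannot be modified once recorded
class PayEvent:
    employee_id: str
    paid_on: date
    kind: str        # semantic tag conveying intent, e.g. "overtime"
    hours: Decimal
    amount: Decimal


@dataclass(frozen=True)
class RuleVersion:
    effective_from: date
    overtime_multiplier: Decimal


# Hypothetical rule history, oldest first. Real rules would come from legislation.
RULES = (
    RuleVersion(date(2020, 1, 1), Decimal("1.5")),
    RuleVersion(date(2023, 4, 1), Decimal("2.0")),
)


def rule_for(day: date) -> RuleVersion:
    """Return the latest rule version already in force on the given day."""
    applicable = [r for r in RULES if r.effective_from <= day]
    if not applicable:
        raise ValueError(f"no rule version covers {day}")
    return applicable[-1]


def audit(events, base_rate: Decimal):
    """Re-derive every overtime payment and return (event, expected) mismatches."""
    issues = []
    for event in sorted(events, key=lambda e: e.paid_on):
        if event.kind != "overtime":
            continue
        rule = rule_for(event.paid_on)
        expected = event.hours * base_rate * rule.overtime_multiplier
        if expected != event.amount:
            issues.append((event, expected))
    return issues


log = (
    PayEvent("emp-1", date(2022, 6, 30), "overtime", Decimal("2"), Decimal("90.00")),
    PayEvent("emp-1", date(2023, 6, 30), "overtime", Decimal("2"), Decimal("90.00")),
)
issues = audit(log, base_rate=Decimal("30.00"))
for event, expected in issues:
    print(f"{event.paid_on}: paid {event.amount}, expected {expected}")
```

In this toy data the second payment was calculated under the older multiplier, so it is the one the check flags. The point is less the arithmetic than the shape: because each event is time-stamped, tagged and immutable, the check can be re-run over the whole history on every pay run.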

With this level of diligence, when the auditors come knocking, the CFO is ready and can confidently prove every decision and every cent spent.

Now imagine what happens when this diligence is applied globally across industries. That’s how supercycles unlock new markets.

Beyond-Human eyesight

Agents can breathe new life into software you can’t use, just as they can for software you hate using - without anyone having to rewrite it from scratch.

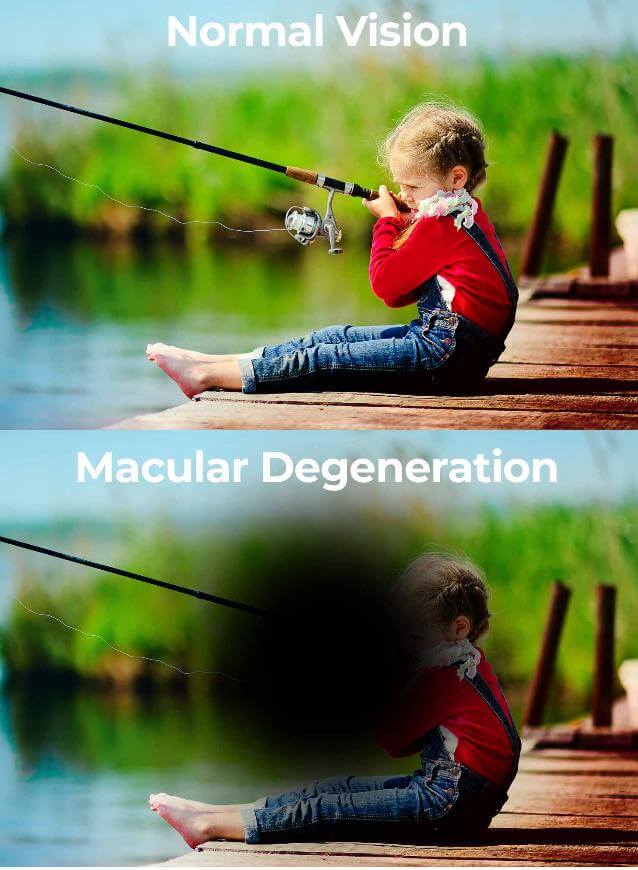

A 2014 study estimated ~10% of New Zealanders 45-85 suffer from macular degeneration.

Macular Degeneration New Zealand estimates that 10% of the 45-85 age bracket suffers from macular degeneration. That’s ~3-4% of the total population of New Zealand. What would it be worth to your company if you could increase the available market for your product by ~3-4%? If you’re selling a B2B SaaS product and you’re stuck with a system whose accessibility is poor, a 4% increase is massive.

Previously, unlocking that new market would be expensive! You either retrofit accessibility into a system that was never designed for it, using technology that was never built for it (anyone remember Adobe Flash? Good luck making that screen-reader compatible), or you rewrite significant portions or the entirety of that product!

With your own personal agent, the story is very different. IBM is never going to update your particular version of Lotus Notes, and yet every fortnight, with an eye condition like macular degeneration, you’ve got to fill in your timesheet. You’re a skilled analyst, but this clunky, inaccessible user interface turns a ten-minute job into half an hour or more of struggle, every fortnight, until you die or leave the company.

Not only can agents dissolve the accessibility-usability dilemma, they can give you a usable facade over unusable software. Your agent can consume the underlying APIs that Lotus Notes uses, unlocking a natural language interface to your timesheets. Whether by text or voice, you tell your agent that you’ve worked on three initiatives this week with a time split of around 20%/40%/40%, and the agent converts that into the API calls needed to fill in your timesheet. If I were making ANY timesheet software today (#startup-idea), I’d bin my UI and create MS Teams, Slack and Discord bots that convert your text or voice into valid timesheets. Not Hipchat or Flowdock though. No nice things for those people.

Flowdock aside, we have a moral imperative to make these systems accessible; it is the right thing to do.

This principle works for any legacy software that can make rapid accessibility gains, to say nothing of software built with accessibility at its core. Eyesight is just one example. Every tech supercycle has expanded access or created new markets, and with agents, we’re about to see it happen again.

Accessibility remains a moral and commercial imperative

Consider this: I’m a company that today sells inaccessible software as a service. I charge $20 monthly, and New Zealand has about 5 million people. Adding 4% of that population to my serviceable addressable market, I can now sell to 200,000 new customers. If I win 5% of those, I can add 10,000 new subscribers and increase my annual recurring revenues by $2.4m. The total addressable market of New Zealand - that five million - is small fry. In June 2024, Sydney had about 5.5 million residents. Accessibility is money. Pure and simple.
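The arithmetic above is simple enough to check in a few lines of Python. The inputs are the same round figures used in the paragraph; the variable names are just labels for this sketch.

```python
population = 5_000_000        # approximate population of New Zealand
affected_percent = 4          # share of the population newly reachable
win_percent = 5               # share of those customers actually won
monthly_price = 20            # dollars per subscriber per month

# Integer arithmetic keeps the figures exact.
new_addressable = population * affected_percent // 100
new_subscribers = new_addressable * win_percent // 100
added_arr = new_subscribers * monthly_price * 12

print(f"{new_addressable:,} new addressable customers")
print(f"{new_subscribers:,} new subscribers")
print(f"${added_arr:,} added annual recurring revenue")
```

Swapping in your own population, price and win rate is the quickest way to see whether the commercial case holds in your market.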

These supercycles - PC, e-commerce, Cloud Computing, Mobile Web, and now AI - each unlocked new markets with brand new users and enabled new interactions that expanded existing markets. They made previously inaccessible systems accessible to those who had been excluded.

The PC created markets, and e-commerce turned local and regional markets global. Cloud computing unlocked new markets by drastically reducing the barrier to entry for brand-new types of companies. AWS had a five- to seven-year head start with startups that used cloud computing to reach new markets faster than the incumbents could keep up. Mobile Web put a device in the pockets of billions of people who previously might have struggled to buy expensive computer hardware!

Enterprises responded to these market changes by either building new products native to the ideas of that supercycle or retrofitting legacy systems with the tech needed to play in that new market, even if the outcomes weren’t as technically pure as building from scratch.

How to build affordances for agents

Affordances in UI/UX include clear and consistent navigation patterns, breadcrumbs, and semantic markup that conveys intent and relationships. In back-end services, affordances include machine-readable APIs with consistent patterns and discoverable endpoints, semantic metadata, explicit error signalling and recovery protocols, data provenance, and separation of commands from queries. All of these things help humans use and build systems today!

All of them are necessary for agents to use and build those same systems tomorrow.

Consider building a significant integration into a product or system never designed for it. If you neglect affordances for agents today, you will feel the same pain tomorrow as you felt building that integration. Maybe more so.

We already strive to build clean interfaces with clear documentation and unambiguous behaviours. We do this for humans and third-party developers who want to integrate with us.

The only difference is that tomorrow’s third parties will be agents. Those agents will expect the same affordances we build today, and the customers those agents serve will expect that your software works with their agent (or your own).

So, what can you do today to best prepare? If you don’t happen to be rewriting your whole product from scratch, what can you do to ensure that your product meets the prerequisites for agentic success?

Concrete steps teams can take today

Audit current affordances to identify gaps in context or access.

In surgery, an agent can only act safely if it knows what tools are available, what states the patient is in, and what the patient’s vitals mean. If those affordances are missing, the agent is blind.

- Start by mapping the interaction surfaces of your products. Any place a human or machine can read, write or otherwise interact. Ask: If the user were an agent, would they have all of the information they need to understand what is possible here?

- Look for missing cues, e.g. unclear navigation, inconsistent APIs or documentation, or state changes that are communicated poorly or not at all.

- Treat this like a penetration test, but for usability. You’re trying to find where an agent would be blind or blocked because of missing affordances.

Add semantic metadata, ensuring every entity/event is machine-readable.

Agents cannot guess intent. They cannot be aware of the conversations that were had in the room back during design time. They only have access to what you grant them at run time. Provide explicit signals for use that convey intent. Add semantic tags to your data models, events, and UI elements to make their meaning unambiguous.

For example, you might return a response to a UI that looks something like this:

    {
      "content": {...}, // omitted for brevity
      "metadata": {
        "workflow_stage": 3
      }
    }

In payroll, an agent can’t guess that "workflow_stage": 3 means “awaiting approval from a payroll manager”. Your UI might store an enum that maps 3 to something a human might understand, but your agent won’t know what to make of this. You might resolve this in a few different ways. One option might be to ship a representation of the enum class with every response, but I can hear the purists in the back groaning about extra bytes over the wire, so what if we just did this instead:

    {
      "content": {...}, // omitted for brevity
      "metadata": {
        "workflow_stage": 3,
        "workflow_description": "Awaiting approval from a payroll manager"
      }
    }

This simple change tells us (and our agents) a lot! This is a payroll application, and we expect a manager to approve this task. An agent can then carry that information into whatever other process is needed. A small change can make a big difference.
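On the server side, one way to produce that response is to keep the human-readable description next to the enum, so the two never drift apart. The sketch below is a Python illustration only. Stage 3 and its description come from the example above; the other stage names, their descriptions and the `build_response` helper are hypothetical.

```python
import json
from enum import IntEnum


class WorkflowStage(IntEnum):
    # Only stage 3 comes from the example above; the others are made up.
    DRAFT = 1
    SUBMITTED = 2
    AWAITING_APPROVAL = 3


DESCRIPTIONS = {
    WorkflowStage.DRAFT: "Draft, not yet submitted",
    WorkflowStage.SUBMITTED: "Submitted for processing",
    WorkflowStage.AWAITING_APPROVAL: "Awaiting approval from a payroll manager",
}


def build_response(content: dict, stage: WorkflowStage) -> dict:
    """Attach both the numeric stage and its meaning to the payload."""
    return {
        "content": content,
        "metadata": {
            "workflow_stage": int(stage),
            "workflow_description": DESCRIPTIONS[stage],
        },
    }


response = build_response({}, WorkflowStage.AWAITING_APPROVAL)
print(json.dumps(response, indent=2))
```

Because the description is looked up from the same enum the UI uses, adding a stage without describing it fails loudly with a `KeyError` instead of silently shipping an opaque number.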

Separate commands and queries to clarify state changes vs information retrieval.

In a legacy retrofit, an agent filling in a Lotus Notes timesheet should be able to read current entries without risk of accidentally writing changes. In surgery, separating “monitor vitals” from “adjust anaesthetic” presents a clear safety improvement.

Splitting reads from writes improves modularity, encapsulation and scalability, and keeps interfaces small and clean. It can also substantially de-risk agent use of your software!

Separating reads from writes creates structurally clear and governable ways for agents to interact. Want a read-only agent? Scope its token to your read surface. Want your agent to plan before acting? Give it the context that API 1 is for planning and API 2 is for executing.

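Command Query Responsibility Segregation (CQRS), the pattern of keeping reads and writes on separate paths, makes it easier for agents to plan, simulate, and validate actions before committing. We want agents to be cautious and measured, like human operators.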
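As a rough illustration of scoping a token to the read surface, here is a small Python sketch. It is an in-memory toy, not a real auth system: the scope names, the `TimesheetService` class and the idea of a token as a set of scopes are all assumptions made for the example.

```python
READ = "timesheet:read"     # hypothetical scope names
WRITE = "timesheet:write"


class PermissionDenied(Exception):
    """Raised when a token lacks the scope an operation requires."""


def require(token: frozenset, scope: str) -> None:
    if scope not in token:
        raise PermissionDenied(f"token lacks scope {scope!r}")


class TimesheetService:
    def __init__(self) -> None:
        self._hours = {}

    # Query: returns a copy, so a reader can never change stored state.
    def read_entries(self, token: frozenset) -> dict:
        require(token, READ)
        return dict(self._hours)

    # Command: the only path that changes state, and it needs the write scope.
    def set_hours(self, token: frozenset, initiative: str, hours: float) -> None:
        require(token, WRITE)
        if hours < 0:
            raise ValueError("hours must not be negative")
        self._hours[initiative] = hours


service = TimesheetService()
human_token = frozenset({READ, WRITE})
read_only_agent = frozenset({READ})

service.set_hours(human_token, "initiative-a", 8.0)
snapshot = service.read_entries(read_only_agent)

try:
    service.set_hours(read_only_agent, "initiative-a", 0.0)
    blocked = False
except PermissionDenied:
    blocked = True
```

The read-only agent can inspect every entry but cannot reach the command path at all, which is the structural guarantee the paragraph above is describing.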

Expose intent surfaces, letting agents understand why actions are taken.

Agents are like my 10-year-old. He craves the “why” of things. Agents need that “why” as well, not just the “what”.

In our JSON example above, you saw that we exposed a metadata block in our API response. That metadata block is a crucial intent surface. Intent surfaces are metadata or API endpoints that explain the rationale behind specific actions we might take, are taking, or have taken in the past.

Consider this API response for a payroll adjustment:

    {
      "content": {...}, // omitted for brevity
      "metadata": {
        "reason_code": "overtime",
        "policy_reference": "HR-OT-2024"
      }
    }

With a response like this, an agent understands that we’ve adjusted pay because a staff member has worked overtime, and to ensure compliance, the agent can cross-reference (via RAG or e.g. an HTTP request to a document store) the overtime payment against the policy. It can do this as a matter of course for all overtime payments or only for anomalous overtime payments (because they are high, or low, or on an unexpected or unusual day of the week or for any other reason). The agent can do this check at audit time, or it can do it asynchronously while the pay run is still being worked on - so we can look back in history and spot issues AND prevent new problems from appearing.
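Here is a hedged Python sketch of what that cross-reference might look like from the agent's side. The `POLICIES` dictionary stands in for a document store or RAG lookup, and its contents are invented. The `hours` field inside `content` is also hypothetical, since the example above omits the content body.

```python
# Stand-in for a policy document store; the limit below is a made-up value.
POLICIES = {
    "HR-OT-2024": {"reason_code": "overtime", "max_hours_per_week": 10},
}


def review_adjustment(adjustment: dict) -> list:
    """Return a list of findings; an empty list means nothing looked unusual."""
    meta = adjustment["metadata"]
    policy = POLICIES.get(meta["policy_reference"])
    if policy is None:
        return [f"unknown policy {meta['policy_reference']}"]

    findings = []
    if policy["reason_code"] != meta["reason_code"]:
        findings.append("reason code does not match the referenced policy")
    hours = adjustment["content"]["hours"]  # hypothetical content field
    if hours > policy["max_hours_per_week"]:
        findings.append(f"{hours} overtime hours exceeds the policy limit")
    return findings


normal = {
    "content": {"hours": 4},
    "metadata": {"reason_code": "overtime", "policy_reference": "HR-OT-2024"},
}
anomalous = {
    "content": {"hours": 25},
    "metadata": {"reason_code": "overtime", "policy_reference": "HR-OT-2024"},
}

print(review_adjustment(normal))
print(review_adjustment(anomalous))
```

The same function could run at audit time over historical adjustments or asynchronously while a pay run is still open. Without the `reason_code` and `policy_reference` fields, it would have nothing to check against.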

Implement provenance hooks to track data origin and transformations.

Provenance is the audit trail for meaning. Every piece of data should carry its origin, transformation history, and trust level.

When data carries this information, agents can verify accuracy, detect anomalies and explain decisions to humans.

A medical agent deciding on a treatment plan (perhaps after the surgery we discussed earlier is complete) can trace lab results back to a specific machine, calibration date, and technician and flag if some aspect of that calibration might have a material impact on the patient’s well-being.
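A minimal Python sketch of that idea follows, assuming a lab result that carries its own provenance record. The field names, the 90-day calibration threshold and the sample values are illustrative assumptions, not clinical guidance or a real lab system's schema.

```python
from dataclasses import dataclass, replace
from datetime import date


@dataclass(frozen=True)
class Provenance:
    machine_id: str
    calibrated_on: date
    technician: str
    trust_level: str = "verified"   # hypothetical trust label
    transformations: tuple = ()     # ordered history of changes


@dataclass(frozen=True)
class LabResult:
    name: str
    value: float
    measured_on: date
    provenance: Provenance


def with_step(result: LabResult, new_value: float, step: str) -> LabResult:
    """Return a new result whose provenance records the transformation."""
    history = result.provenance.transformations + (step,)
    new_provenance = replace(result.provenance, transformations=history)
    return replace(result, value=new_value, provenance=new_provenance)


def calibration_flags(result: LabResult, max_age_days: int = 90) -> list:
    """Flag results measured long after the machine was last calibrated."""
    age = (result.measured_on - result.provenance.calibrated_on).days
    if age > max_age_days:
        return [f"{result.provenance.machine_id} calibrated {age} days before measurement"]
    return []


raw = LabResult(
    name="potassium",
    value=4.1,
    measured_on=date(2025, 3, 10),
    provenance=Provenance("analyser-7", date(2024, 11, 1), "tech-042"),
)
rounded = with_step(raw, 4.0, "rounded to nearest integer")
flags = calibration_flags(rounded)
print(rounded.provenance.transformations)
print(flags)
```

Because the records are immutable and every transformation returns a new value with an extended history, the agent can always explain how a number came to be and which machine and technician it traces back to.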

These steps aren’t solely about future-proofing in the abstract; they’re about making your systems agent-ready now. The teams that start today will be the teams whose products feel inevitable across the next supercycle. Everyone else will be scrambling to retrofit under pressure.

Conclusion

Take yourself back to the year 2000 once more. The iPhone is coming. Android is nearly here. What would you do if you were there again? I believe that we are there today. You might argue about the moral rights and wrongs of AI, or you might think that we are in a massive bubble. You might be right. But the e-commerce supercycle lasted a full decade after the dotcom bubble burst. The bubble was a harbinger of a changing world.

We are at the start of a new supercycle: a supercycle of agents that I believe will be bigger than cloud computing, bigger than the mobile web, bigger than e-commerce. Maybe even bigger than the PC.

The Helpful Web: humans and AI allies expanding access for everyone. Accessibility isn’t just inclusion; it’s growth. Audit your affordances, design for agents, make AI your company strategy. In ten years, bypassing your agents will feel like malpractice.

References

[1] What are Affordances?, IxDF

[2] About Macular Degeneration, Macular Degeneration New Zealand